What Are ETL Pipelines? Breaking Down Their Importance to Your Organization

By Thayer Tate

Do you find it hard to keep track of scattered business data? Are you struggling to develop clear, actionable insights from your existing data architecture? You’re not alone. For organizations of all sizes, it can be challenging in today’s landscape to keep data organized. As companies grow, data is often collected from multiple sources, such as customer interactions, sales platforms, marketing tools, and operational databases. Without a structured way to consolidate and process this data, decision-making becomes inefficient, and opportunities can slip through the cracks.

This is where ETL (Extract, Transform, Load) pipelines come into play. Done right, they automate the process of gathering raw data, converting it into a usable format, and loading it into a centralized repository for analysis. Whether you’re managing financial reports, customer analytics, or operational metrics, ETL pipelines help keep your data accurate, accessible, and ready to drive business success. In this post, we’ll look at how they work and why they matter, so you can decide how to set one up at your organization.

What is an ETL Data Pipeline?

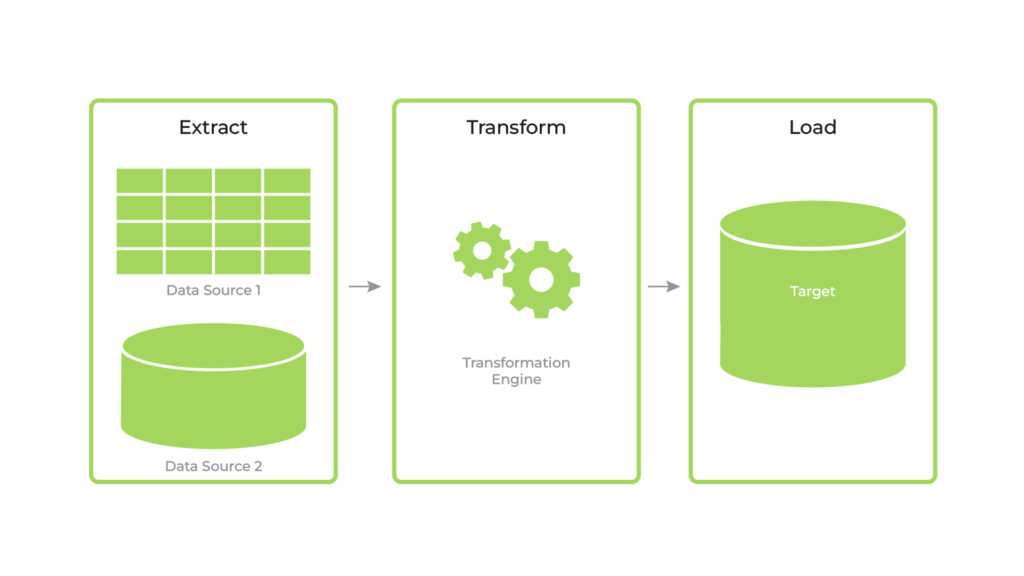

At its core, an ETL pipeline extracts data, transforms it into a standardized format, and loads it into a repository such as a data warehouse or data lake. This gives businesses clean, consistent, and ready-to-use data for decision-making, and it gives business leaders and their teams access to historical data that may have been tough to find before. One common example is a batch-processing pipeline, which collects data over a period of time and processes it in scheduled groups (batches), such as a nightly run, rather than record by record as it arrives.

While ETL follows a structured process—extracting data, transforming it, and then loading it—there’s an alternative approach called ELT (Extract, Load, Transform). This process reverses the final two steps, loading raw data first and performing transformations later within a data warehouse. ELT is commonly used in cloud-based environments where storage is cheap, and computing power can handle transformations at scale.
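
To make the difference in ordering concrete, here is a minimal sketch that runs the same cleanup both ways. It uses Python's built-in `sqlite3` module as a stand-in for a data warehouse. The `RAW_ORDERS` data, the table names, and the function names are assumptions made up for illustration, not part of any particular product.

```python
import sqlite3

# Hypothetical raw records, as they might arrive from a source system.
RAW_ORDERS = [("1001", " 19.50 "), ("1002", "5.00"), ("1002", "5.00")]


def run_etl(conn):
    """ETL: clean and deduplicate in application code, then load the result."""
    cleaned = sorted({(int(oid), float(amt.strip())) for oid, amt in RAW_ORDERS})
    conn.execute("CREATE TABLE orders (order_id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", cleaned)


def run_elt(conn):
    """ELT: load the raw rows untouched, then transform inside the database."""
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?)", RAW_ORDERS)
    conn.execute(
        "CREATE TABLE orders AS "
        "SELECT DISTINCT CAST(order_id AS INTEGER) AS order_id, "
        "CAST(TRIM(amount) AS REAL) AS amount "
        "FROM raw_orders"
    )


def fetch_orders(conn):
    """Read back the cleaned table in a stable order."""
    return conn.execute(
        "SELECT order_id, amount FROM orders ORDER BY order_id"
    ).fetchall()
```

Both functions end with the same cleaned `orders` table. The ELT version also keeps the untouched `raw_orders` table in the database, which is one reason the approach suits environments where storage is inexpensive.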

How Do ETL Pipelines Work?

The exact steps of an ETL pipeline are carefully structured to ensure your data remains usable. Each step plays a critical role in maintaining the integrity and efficiency of your data.

- Extract: Many organizations need to collect data from multiple sources. The extraction phase pulls this raw data from sources such as databases, APIs, spreadsheets, and cloud platforms. Handling inconsistent data formats and moving large amounts of data without creating bottlenecks can be challenging, but a well-designed ETL pipeline can limit data loss along the way.

- Transform: Next, you’ll need to convert your raw data into a usable format. Once extracted, data often requires cleaning and restructuring to ensure accuracy and consistency. The transformation stage includes filtering errors, removing duplicates, aggregating information, and converting formats to align with business needs. Several tools and languages can help with this, including SQL, Python, and open-source Apache projects such as Spark.

- Load: Finally, you’ll want to identify the best centralized space to store your data. This could be a platform such as AWS, Salesforce, or Snowflake. Once you’ve identified the right destination, you’ll need to choose between full loads, which rewrite the entire dataset on each run, and incremental loads, which add only new or changed records. You’ll also need to prevent duplicate records from mixing in with your data. Once completed, this step opens up a world of possibilities for your organization, allowing you to report on the data, analyze it, and even use it for machine learning.
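
The three steps above can be sketched in a few lines of Python using only the standard library. This is an illustration under stated assumptions rather than a production design: the sample CSV, the `customers` table, and the function names are hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical export from a sales platform; a real pipeline would read a
# file, an API response, or a database query instead of an inline string.
SAMPLE_CSV = """customer_id,email,order_total
1, Ana@Example.com ,120.50
2,bob@example.com,not available
1, Ana@Example.com ,120.50
3,cy@example.com,75
"""


def extract(source):
    """Extract: read raw rows from a CSV source as dictionaries."""
    return list(csv.DictReader(source))


def transform(rows):
    """Transform: filter bad rows, standardize formats, remove duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        try:
            total = float(row["order_total"])
        except ValueError:
            continue  # filter out rows whose total is not numeric
        record = (int(row["customer_id"]), row["email"].strip().lower(), total)
        if record not in seen:  # drop exact duplicates
            seen.add(record)
            cleaned.append(record)
    return cleaned


def load(conn, records):
    """Load: upsert on customer_id so re-running does not create duplicates."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "customer_id INTEGER PRIMARY KEY, email TEXT, order_total REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(conn, source):
    """Run the three stages in order."""
    load(conn, transform(extract(source)))
```

Calling `run_pipeline(sqlite3.connect(":memory:"), io.StringIO(SAMPLE_CSV))` twice on the same connection still leaves one row per customer, because the load step replaces rows that share a `customer_id`. That keyed upsert is one simple way to make repeated or incremental loads safe. A full load would instead clear and rebuild the table on each run.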

What Are the Benefits of ETL Pipelines?

ETL data pipelines do a lot more than simply moving data from Point A to Point B. By automating the data integration process, ETL pipelines provide several key advantages:

- Data Accuracy: Gaining insights from incomplete data can be very difficult. A comprehensive pipeline applies standardization and validation rules, ensuring that only high-quality, well-structured data reaches business intelligence tools.

- Business Efficiency: Manually collecting and processing data is time-consuming and can lead to errors. ETL pipelines streamline this process, reducing human intervention and enabling faster, more reliable data movement. This is especially valuable for organizations handling large datasets across multiple systems.

- Scalability: As your business scales, you’ll likely generate higher volumes of raw data. A well-designed ETL pipeline can absorb that growth, taking on new sources and larger loads without disrupting your existing workflows.

- Compliance: Organizations that need to meet requirements set by regulatory frameworks such as HIPAA or GDPR often have to present large volumes of data. Without a central hub in place, it can be incredibly time-consuming to track down every required record by hand. ETL pipelines help enforce security measures, such as encryption and access controls, while supporting compliance with industry regulations. This empowers your organization to make data-backed decisions as you grow.
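
As an illustration of the standardization and validation rules mentioned under Data Accuracy, the sketch below checks each record against a few simple rules and sets aside anything that fails. The field names, the rules, and the email pattern are hypothetical. Real rules depend on your data and on the regulations that apply to it.

```python
import re

# Deliberately loose email check for illustration only (an assumption,
# not a complete validator).
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def validate(record):
    """Return a list of rule violations; an empty list means the record passes."""
    problems = []
    if not record.get("customer_id"):
        problems.append("missing customer_id")
    if not EMAIL_PATTERN.match(record.get("email") or ""):
        problems.append("invalid email")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        problems.append("amount must be a non-negative number")
    return problems


def split_valid(records):
    """Separate records that pass every rule from those needing review."""
    valid, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            valid.append(record)
    return valid, rejected
```

Keeping the rejected records together with the reasons they failed, rather than silently dropping them, makes it easier to fix problems at the source and to show auditors what was excluded and why.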

SOLTECH: Helping Organizations Maximize Data Potential

Are you looking to create an ETL pipeline of your own? Do you need guidance for engineering and integrating your raw data? SOLTECH has helped organizations like yours achieve their data goals for over 30 years. Our team of experts can help you with everything from creating a data integration strategy to developing an ETL data pipeline to unlocking real-time insights. To learn more about our services, contact us today.

FAQs

What is an ETL pipeline?

An ETL pipeline is a data integration process that Extracts raw data from multiple sources, Transforms it into a structured format, and Loads it into a centralized system.

What is a data pipeline tool?

A data pipeline tool automates the movement and processing of data between systems. ETL tools specialize in transforming data, while broader data pipeline tools support real-time streaming, batch processing, and cloud-based workflows.

How do I build an ETL pipeline?

To build your organization’s ETL pipeline, you’ll want to first identify which data sources you’d like to integrate. Then, you’ll extract data with a data pipeline tool, transform the raw data, and then load it into a centralized data warehouse.

Thayer Tate

Chief Technology Officer

Thayer is the Chief Technology Officer at SOLTECH, bringing over 20 years of experience in technology and consulting to his role. Throughout his career, Thayer has focused on successfully implementing and delivering projects of all sizes. He began his journey in the technology industry with renowned consulting firms like PricewaterhouseCoopers and IBM, where he gained valuable insights into handling complex challenges faced by large enterprises and developed detailed implementation methodologies.

Thayer’s expertise expanded as he obtained his Project Management Professional (PMP) certification and joined SOLTECH, an Atlanta-based technology firm specializing in custom software development, technology consulting, and IT staffing. During his tenure at SOLTECH, Thayer honed his skills by managing the design and development of numerous projects, eventually assuming executive responsibility for leading the technical direction of SOLTECH’s software solutions.

As a thought leader and industry expert, Thayer writes articles on technology strategy and planning, software development, project implementation, and technology integration. Thayer’s aim is to empower readers with practical insights and actionable advice based on his extensive experience.