Data Optimization: Why It Matters for Your Organization

By Thayer Tate

When it comes to your organization’s data, its performance and accuracy are everything. Whether you’re building AI models, generating business intelligence dashboards, or running real-time applications, the ability to generate quality insights is only as good as the data infrastructure behind it. Yet, many organizations struggle with data that is slow, expensive to store, or unreliable for decision-making. That’s where data optimization comes in.

Data optimization is the process of improving the structure, cleanliness, and efficiency of your datasets so that queries run faster, storage costs less, and the insights they produce are more trustworthy. If you’re looking to build scalable systems, strong data analytics and optimization capabilities are crucial. Done right, this process can help you reduce costs while accelerating time to value.

In this post, we’ll discuss data optimization, core techniques you can apply today, and how platforms such as Microsoft Azure and AWS can help you along the way.

Why Data Optimization Matters in Today’s Market

Optimized data can drive your organization forward, but unoptimized data can quietly erode its performance and decision-making while driving up storage costs and weakening reporting. Without proper data optimization, systems tend to accumulate inefficiencies that affect both performance and accuracy, including:

- Slow query performance that frustrates end users and analysts.

- Storage costs that balloon in the cloud, where you pay for every gigabyte you store and, with some query engines, for every byte a query scans.

- Dirty or inconsistent data that undermines your team’s trust in analytics.

This is especially critical in AI and machine learning applications, which are often sensitive to data quality. Poorly optimized data can degrade model performance, introduce bias, and increase the time it takes to train or retrain models.

Ultimately, data optimization ensures your tech stack isn’t just operational, but also strategic. It lays the foundation for healthy, scalable systems, including AI.

Core Techniques for Data Optimization

Achieving high-level data analytics and optimization is less about a single tool and more about the right strategy. Here are five techniques that can boost performance, reduce cost, and improve accuracy:

- Data Cleansing and Deduplication

Before any advanced analytics, you need a clean foundation. Removing duplicates, null values, and inconsistencies ensures you aren’t drawing insights from flawed data. Tools for this step include Azure Data Factory, whose data flow activities clean and transform data at scale, and AWS Glue DataBrew, which lets you clean data without writing code.
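To make the cleansing step concrete, here is a minimal sketch using only the Python standard library. Everything in it is hypothetical: the `RAW_RECORDS` sample, the field names, and the rule that `customer_id` and `email` are required. It normalizes whitespace and casing, drops incomplete rows, and keeps the first record for each `customer_id`. Services such as Azure Data Factory or AWS Glue DataBrew apply the same ideas at scale; this code does not use or imitate their APIs.

```python
from typing import Iterable

# Hypothetical raw customer records, e.g. exported from two source systems.
RAW_RECORDS = [
    {"customer_id": "C001", "email": "Ana@Example.com ", "country": "us"},
    {"customer_id": "C001", "email": "ana@example.com", "country": "US"},
    {"customer_id": "C002", "email": None, "country": "CA"},
    {"customer_id": "C003", "email": "li@example.com", "country": " ca"},
]

# Assumption for illustration: rows missing these fields are unusable.
REQUIRED_FIELDS = ("customer_id", "email")


def normalize(record: dict) -> dict:
    """Trim whitespace and standardize casing so equivalent values compare equal."""
    return {
        "customer_id": record["customer_id"].strip(),
        "email": record["email"].strip().lower() if record["email"] else None,
        "country": record["country"].strip().upper() if record["country"] else None,
    }


def cleanse(records: Iterable[dict]) -> list:
    """Normalize rows, drop incomplete ones, and deduplicate on customer_id."""
    seen = set()
    cleaned = []
    for raw in records:
        row = normalize(raw)
        if any(row[field] is None for field in REQUIRED_FIELDS):
            continue  # drop rows missing a required field
        if row["customer_id"] in seen:
            continue  # duplicate key: keep only the first occurrence
        seen.add(row["customer_id"])
        cleaned.append(row)
    return cleaned


if __name__ == "__main__":
    for row in cleanse(RAW_RECORDS):
        print(row)
```

Normalizing before deduplicating means the surviving record carries standardized values (lowercase email, uppercase country code). Keeping the first occurrence is a simplifying choice; many pipelines keep the most recent or most complete record instead.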

- Schema Optimization

Well-structured data delivers faster queries and scales more gracefully. Schema optimization can range from denormalizing tables for read-heavy workloads to introducing surrogate keys that reporting tools can join on efficiently. For tooling, Azure Synapse Analytics dedicated SQL pools support performance tuning through table distribution, indexing, and partitioning choices, while Amazon Redshift lets you tune performance with sort keys, distribution keys, and column compression encodings.
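The sketch below illustrates two of these schema ideas in a tiny star schema, using Python’s built-in `sqlite3` module so it runs anywhere. The table names, columns, and sample SKUs are hypothetical. The dimension table assigns a compact integer surrogate key to each business SKU and stores the category directly on the product row (a denormalization), so the reporting query needs only one join. Redshift sort and distribution keys are engine-specific DDL and are not shown.

```python
import sqlite3

# Hypothetical source rows: (sku, product_name, category, sale_date, amount)
SOURCE_SALES = [
    ("SKU-100", "Desk Lamp", "Lighting", "2024-01-05", 40.0),
    ("SKU-200", "Office Chair", "Furniture", "2024-01-06", 150.0),
    ("SKU-100", "Desk Lamp", "Lighting", "2024-01-07", 45.0),
]


def build_schema() -> sqlite3.Connection:
    """Create a minimal star schema: one dimension table and one fact table."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE dim_product (
            product_key  INTEGER PRIMARY KEY,  -- surrogate key assigned by the warehouse
            sku          TEXT NOT NULL UNIQUE, -- natural key from the source system
            product_name TEXT NOT NULL,
            category     TEXT NOT NULL         -- denormalized onto the product row
        );
        CREATE TABLE fact_sales (
            sale_id     INTEGER PRIMARY KEY,
            product_key INTEGER NOT NULL REFERENCES dim_product (product_key),
            sale_date   TEXT NOT NULL,
            amount      REAL NOT NULL
        );
        """
    )
    return conn


def product_key_for(conn, sku, name, category) -> int:
    """Look up the surrogate key for a SKU, creating the dimension row if it is new."""
    row = conn.execute(
        "SELECT product_key FROM dim_product WHERE sku = ?", (sku,)
    ).fetchone()
    if row is not None:
        return row[0]
    cur = conn.execute(
        "INSERT INTO dim_product (sku, product_name, category) VALUES (?, ?, ?)",
        (sku, name, category),
    )
    return cur.lastrowid


def load_sales(conn, source_rows) -> None:
    """Load facts, replacing each natural key with its integer surrogate key."""
    for sku, name, category, sale_date, amount in source_rows:
        key = product_key_for(conn, sku, name, category)
        conn.execute(
            "INSERT INTO fact_sales (product_key, sale_date, amount) VALUES (?, ?, ?)",
            (key, sale_date, amount),
        )
    conn.commit()


def revenue_by_category(conn) -> list:
    """A typical reporting query: one join on the integer key, then aggregate."""
    return conn.execute(
        """
        SELECT d.category, SUM(f.amount)
        FROM fact_sales AS f
        JOIN dim_product AS d ON d.product_key = f.product_key
        GROUP BY d.category
        ORDER BY d.category
        """
    ).fetchall()


if __name__ == "__main__":
    conn = build_schema()
    load_sales(conn, SOURCE_SALES)
    print(revenue_by_category(conn))
```

Fact rows store a small integer instead of repeating the SKU string, and if a source system ever renames a SKU, only one dimension row changes.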

- Data Compression and Partitioning

Much of the storage savings from data optimization comes from this step. Compression reduces the number of bytes you store, and organizing data into partitions (for example, by date) lets queries read only the relevant slices, which speeds up retrieval and reduces the amount of data scanned. Cloud-native tools include Azure Data Lake Storage Gen2, whose hierarchical namespace and fine-grained access control support efficient data organization, and Amazon S3 with the AWS Glue Data Catalog, where tables are commonly stored as partitioned Parquet or ORC files for high-performance querying.
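Here is a minimal, standard-library-only sketch of both ideas. It writes hypothetical event data into folders named with the `key=value` convention that Hive-compatible engines use for partitions, compresses each file with gzip, and then reads back a single date by opening only that folder (partition pruning). Gzip-compressed CSV stands in for Parquet or ORC here only because those columnar formats need third-party libraries; the folder layout, file names, and sample data are all assumptions for illustration.

```python
import csv
import gzip
import tempfile
from pathlib import Path

# Hypothetical clickstream events; event_date is the partition column.
EVENTS = [
    {"event_date": "2024-03-01", "user_id": "u1", "action": "login"},
    {"event_date": "2024-03-01", "user_id": "u2", "action": "view"},
    {"event_date": "2024-03-02", "user_id": "u1", "action": "purchase"},
]
DATA_COLUMNS = ["user_id", "action"]  # partition column lives in the path, not the file


def write_partitioned(rows, root: Path) -> list:
    """Group rows by event_date and write one gzip-compressed CSV per partition."""
    by_date = {}
    for row in rows:
        by_date.setdefault(row["event_date"], []).append(row)

    written = []
    for event_date, part_rows in sorted(by_date.items()):
        part_dir = root / f"event_date={event_date}"  # Hive-style key=value folder
        part_dir.mkdir(parents=True, exist_ok=True)
        path = part_dir / "part-0000.csv.gz"
        with gzip.open(path, "wt", newline="") as f:
            # extrasaction="ignore" drops event_date, which the folder name encodes
            writer = csv.DictWriter(f, fieldnames=DATA_COLUMNS, extrasaction="ignore")
            writer.writeheader()
            writer.writerows(part_rows)
        written.append(path)
    return written


def read_partition(root: Path, event_date: str) -> list:
    """Partition pruning: open only the folder for the requested date."""
    rows = []
    for path in sorted((root / f"event_date={event_date}").glob("*.csv.gz")):
        with gzip.open(path, "rt", newline="") as f:
            for row in csv.DictReader(f):
                row["event_date"] = event_date  # restore the partition column from the path
                rows.append(row)
    return rows


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for path in write_partitioned(EVENTS, root):
            print(path.relative_to(root))
        print(read_partition(root, "2024-03-02"))
```

A query for one day never touches the other day’s files, which is the same effect a partitioned table gives a query engine scanning cloud storage.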

- Aggregation and Materialized Views

Saving your team time can make a big impact on your organization. Instead of recalculating metrics every time a report loads, you can precompute and store common aggregations. Use materialized views to back reporting dashboards, and roll high-volume data up into summary tables. Cloud-native tools include Azure SQL Database (through indexed views), Amazon Redshift, and Amazon Aurora PostgreSQL.

- Automated Data Optimization With AI Tools

Most modern platforms include built-in intelligence to help your team optimize processes such as:

- Query plan analysis and auto-indexing

- Workload management recommendations

- Anomaly detection in pipeline execution

Tools you can use for this step include Azure Advisor, Amazon Redshift (through Redshift Advisor and automatic table optimization), and AWS Compute Optimizer.
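As a rough illustration of the anomaly-detection idea, the sketch below flags pipeline runs whose duration sits far outside the recent norm, using a rolling z-score over the previous few runs. This is a simple statistical stand-in chosen for clarity, not how Azure Advisor, Redshift, or AWS Compute Optimizer work internally. The run durations, the five-run window, and the three-standard-deviation threshold are all hypothetical.

```python
from statistics import mean, stdev

# Hypothetical run durations (minutes) for one nightly pipeline, oldest first.
RUN_MINUTES = [12.0, 11.5, 12.3, 11.8, 12.1, 12.4, 30.2, 12.0]


def flag_anomalies(durations, window=5, threshold=3.0):
    """Return indices of runs whose duration is more than `threshold` standard
    deviations away from the mean of the preceding `window` runs."""
    anomalies = []
    for i in range(window, len(durations)):
        history = durations[i - window:i]
        mu = mean(history)
        sigma = stdev(history)  # sample standard deviation; needs window >= 2
        if sigma == 0:
            # Perfectly stable history: treat any change at all as anomalous.
            if durations[i] != mu:
                anomalies.append(i)
            continue
        if abs(durations[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    for i in flag_anomalies(RUN_MINUTES):
        print(f"run {i}: {RUN_MINUTES[i]} min looks anomalous")
```

One design caveat: a flagged run stays in the window for later comparisons and inflates the standard deviation, which can hide a second anomaly shortly after the first. A production version might exclude flagged runs from the baseline.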

Choosing the Right Data Optimization Tools

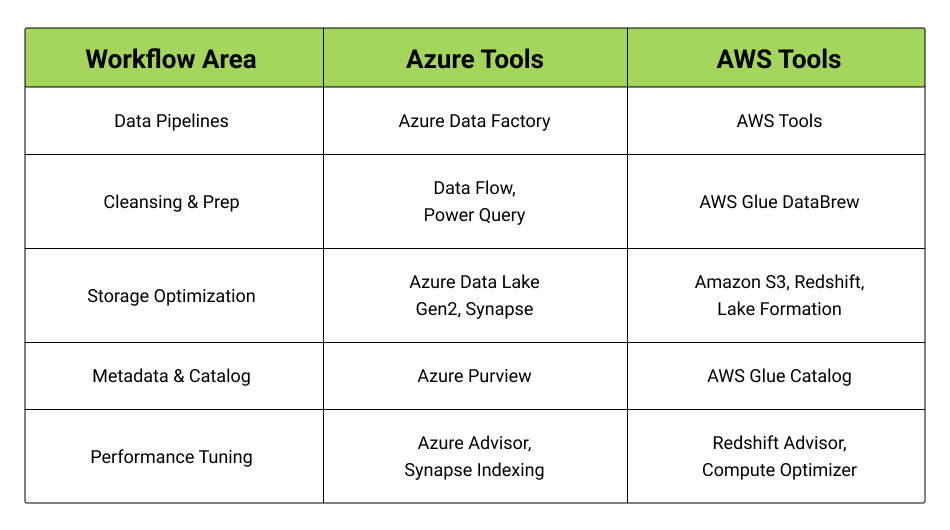

Selecting the right mix of cloud-native tools can make or break your data optimization strategy. The key is to align your tools with your team’s data stack, capabilities, and workflow.

These cloud-native tools integrate deeply into their respective ecosystems, enabling seamless orchestration, monitoring, and optimization at scale.

How SOLTECH Helps Clients Optimize Their Data

Looking for data optimization examples? Let’s start with our partnership with Rinnai America Corporation. The organization’s poorly optimized data tools forced its team to perform manual data management, which was very time-consuming. After working with SOLTECH, Rinnai streamlined its data tools, empowering its sales team to gain real-time updates on products and pricing.

Another SOLTECH client, Haggai International, needed a way to centralize its data. Our Salesforce integration allowed the organization to capture its data in one place and make it accessible across its many teams.

Because your industry can shift quickly, it’s critical to regularly assess and optimize your current architecture and pipelines. To find out how we can help your organization get more value from its data, learn more about our unique process. You can also contact us to discuss your team’s data needs.

FAQs

What is data optimization?

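Data optimization is the process of improving the structure, quality, and efficiency of your organization’s data so it is faster to query, cheaper to store, and more reliable for decision-making, whether it lives in the cloud or on-premises.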

Why is data optimization important for businesses?

Having an efficient data strategy in place can help your organization achieve faster insights, lower cloud costs, and more accurate reporting.

What are some common data optimization techniques?

Common techniques for optimizing your organization’s data include data cleaning, schema optimization, compression, partitioning, and using materialized views.

Thayer Tate

Chief Technology Officer

Thayer is the Chief Technology Officer at SOLTECH, bringing over 20 years of experience in technology and consulting to his role. Throughout his career, Thayer has focused on successfully implementing and delivering projects of all sizes. He began his journey in the technology industry with renowned consulting firms like PricewaterhouseCoopers and IBM, where he gained valuable insights into handling complex challenges faced by large enterprises and developed detailed implementation methodologies.

Thayer’s expertise expanded as he obtained his Project Management Professional (PMP) certification and joined SOLTECH, an Atlanta-based technology firm specializing in custom software development, technology consulting, and IT staffing. During his tenure at SOLTECH, Thayer honed his skills by managing the design and development of numerous projects, eventually assuming executive responsibility for leading the technical direction of SOLTECH’s software solutions.

As a thought leader and industry expert, Thayer writes articles on technology strategy and planning, software development, project implementation, and technology integration. Thayer’s aim is to empower readers with practical insights and actionable advice based on his extensive experience.